🎓 Deep Dive into Transformer (GEMORNA) for mRNA Design

Image credit: Nano Banana

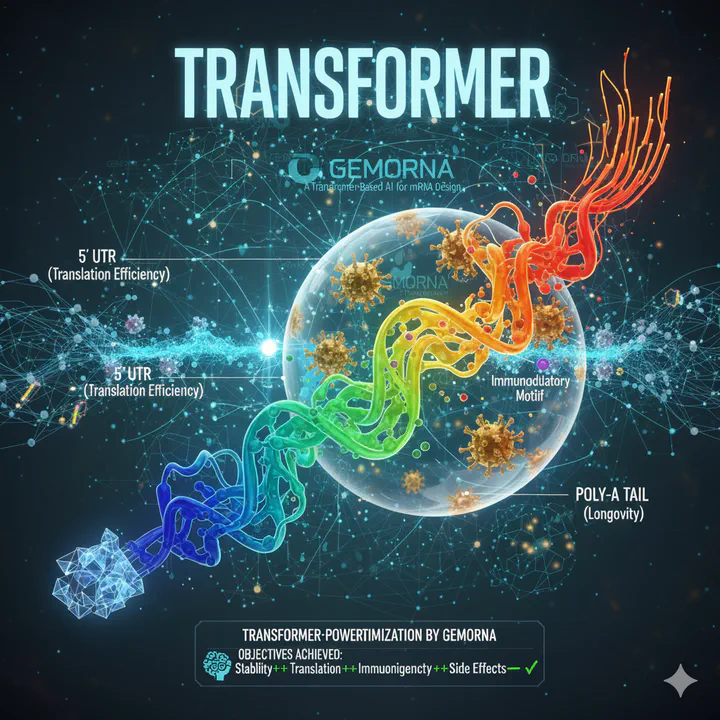

The GEMORNA family of models represents a sophisticated approach to computational mRNA design, demonstrating how transformer architectures can be leveraged for both coding sequence (CDS) generation and untranslated region (UTR) design. This comprehensive exploration reveals not only the technical foundations of these models but also their surprising emergent capabilities that arise during inference.

Part I: GEMORNA-UTR - Autoregressive Design of Untranslated Regions

What is GEMORNA-UTR?

GEMORNA-UTR is a specialized model designed for generating untranslated regions (UTRs) of mRNA. UTRs are crucial regulatory sequences that appear before (5’ UTR) or after (3’ UTR) the coding sequence in mRNA molecules. While these regions don’t encode proteins themselves, they play a vital role in determining how efficiently an mRNA is translated into protein and how stable the transcript is.

Why Use a Decoder-Only Transformer?

The choice of a decoder-only Transformer architecture for GEMORNA-UTR is particularly strategic. Unlike protein-coding sequences (CDSs) that have a clear “source” sequence (the protein), UTRs don’t have such predetermined templates. This makes them ideal candidates for de novo design—creating entirely new sequences from scratch.

A decoder-only Transformer excels at this task through autoregressive generation: it generates sequences step by step, predicting the next nucleotide based on all previously generated nucleotides. This approach is fundamentally different from encoder-decoder architectures used in GEMORNA for CDSs, where there’s a clear source-to-target translation relationship.

Understanding Autoregressive Generation

Autoregressive generation means the model generates one element at a time, using previously generated elements as context. In GEMORNA-UTR’s case:

- The model starts with a start-of-sequence (SOS) token

- It predicts the next nucleotide based on the SOS token

- The newly predicted nucleotide is added to the sequence

- The process repeats, with each new prediction based on all previous nucleotides

- Generation continues until the complete UTR sequence is formed

This sequential dependency allows the model to learn complex patterns like motifs and secondary structures that naturally occur in functional UTRs.
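The generation loop above can be sketched in a few lines of Python. This is a minimal illustration, not GEMORNA-UTR's actual code: `toy_next_token_probs` is a hypothetical stand-in for the trained Transformer, and the end-of-sequence token and `max_len` cutoff are assumptions about how generation stops.

```python
import random

NUCLEOTIDES = ["A", "C", "G", "U"]
SOS, EOS = "<sos>", "<eos>"  # EOS is an assumed stopping token


def toy_next_token_probs(context):
    """Hypothetical stand-in for the model: P(next token | context).

    A real model would run a Transformer forward pass over the whole
    context; this toy version only looks at the last token.
    """
    last = context[-1]
    if last == SOS:
        return {"A": 0.25, "C": 0.25, "G": 0.25, "U": 0.25, EOS: 0.0}
    probs = {n: 0.2 for n in NUCLEOTIDES}
    probs[last] = 0.3   # made-up rule: slightly favor repeating a nucleotide
    probs[EOS] = 0.1    # made-up chance of ending the sequence
    return probs


def generate_utr(next_token_probs, max_len=50, rng=None):
    """Sample one nucleotide at a time, feeding each choice back as context."""
    rng = rng or random.Random()
    sequence = [SOS]
    while len(sequence) - 1 < max_len:
        probs = next_token_probs(sequence)
        tokens = list(probs)
        weights = [probs[t] for t in tokens]
        token = rng.choices(tokens, weights=weights, k=1)[0]
        if token == EOS:
            break
        sequence.append(token)
    return "".join(sequence[1:])  # drop the SOS token


utr = generate_utr(toy_next_token_probs, max_len=30, rng=random.Random(0))
print(utr)
```

Swapping `toy_next_token_probs` for a real model's forward pass would leave the loop itself unchanged, which is the point of the autoregressive formulation.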

Training Strategy: Pre-training + Fine-tuning

GEMORNA-UTR employs a two-stage training approach that balances natural sequence patterns with functional optimization.

Pre-training Phase

Goal: Teach the model general patterns and rules of natural UTR sequences.

- Input: Natural UTR sequences tokenized as [SOS, N₁, N₂, …, Nₙ]

- Output: Probability distribution over the next nucleotide at each position

- Loss Function: Cross-entropy loss between predicted probabilities and actual nucleotides

- Training Strategy: Teacher-forcing with autoregressive prediction

During pre-training, the model learns the fundamental “language” of UTRs—including nucleotide distributions, common motifs, and structural patterns—without any bias toward specific functional outcomes.
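As a rough illustration of the cross-entropy objective, the sketch below sums the negative log-probability that a model assigns to the true nucleotide at each position. The per-position distributions in `step_probs` are made-up numbers standing in for model outputs, not values from GEMORNA-UTR.

```python
import math


def cross_entropy_loss(step_probs, targets):
    """Sum of -log P(true token) over all positions of one sequence."""
    return -sum(math.log(probs[target]) for probs, target in zip(step_probs, targets))


# Hypothetical model outputs for a 3-nucleotide sequence "AUG".
step_probs = [
    {"A": 0.70, "C": 0.10, "G": 0.10, "U": 0.10},  # confident and correct
    {"A": 0.25, "C": 0.25, "G": 0.25, "U": 0.25},  # uninformed guess
    {"A": 0.10, "C": 0.10, "G": 0.60, "U": 0.20},  # mostly correct
]
targets = ["A", "U", "G"]

loss = cross_entropy_loss(step_probs, targets)
print(f"loss = {loss:.4f}")
```

The loss shrinks as the model puts more probability on the observed nucleotides, which is all that either training stage asks of it.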

$$ \text{Loss} = -\sum_{t=1}^{n} \log P(N_t \mid \text{SOS}, N_1, \ldots, N_{t-1}) $$

Fine-tuning Phase

Goal: Specialize the model to generate UTRs with higher translation efficiency or stability.

- Input: Curated UTR sequences selected for high translation efficiency/stability

- Output: Same autoregressive nucleotide prediction

- Loss Function: Identical cross-entropy loss as pre-training

- Key Difference: Training data is carefully selected for functional performance

Fine-tuning doesn’t change the model architecture or loss function. Instead, by training on sequences with desired properties, it nudges the model to prefer nucleotides and patterns associated with better efficiency and stability.
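Since the two stages differ only in their data, the curation step is where the functional bias enters. The sketch below shows one plausible way to build a fine-tuning set, assuming each UTR comes with a measured efficiency or stability score. The `score` field, the `top_fraction` cutoff, and the example sequences are all hypothetical; the source does not describe the actual selection procedure.

```python
def select_finetuning_set(records, top_fraction=0.2):
    """Keep the top-scoring fraction of UTRs (hypothetical curation rule)."""
    ranked = sorted(records, key=lambda r: r["score"], reverse=True)
    keep = max(1, int(len(ranked) * top_fraction))
    return [r["sequence"] for r in ranked[:keep]]


# Made-up sequences and scores, for illustration only.
records = [
    {"sequence": "GGGAAAUAAGAGAG", "score": 0.91},
    {"sequence": "ACUCUUCUGGUCCC", "score": 0.35},
    {"sequence": "GCCACCAUGG", "score": 0.78},
    {"sequence": "UUUUUUUUUU", "score": 0.05},
    {"sequence": "AGGAGGUAAAUA", "score": 0.66},
]

finetune_set = select_finetuning_set(records, top_fraction=0.4)
print(finetune_set)
```

The same training loop and loss would then run on `finetune_set` instead of the full corpus.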

Teacher-Forcing in Training

Teacher-forcing is a crucial training technique used in both pre-training and fine-tuning phases. During training, instead of feeding the model’s own predicted nucleotide as the next input, the system provides the true nucleotide from the training sequence.

Training vs Inference

During Training (with Teacher-forcing):

- Input: [SOS] → predict N₁

- Input: [SOS, N₁] → predict N₂ (using true N₁)

- Input: [SOS, N₁, N₂] → predict N₃ (using true N₁, N₂)

During Inference (without Teacher-forcing):

- Input: [SOS] → predict N₁

- Input: [SOS, N₁_predicted] → predict N₂

- Input: [SOS, N₁_predicted, N₂_predicted] → predict N₃

Teacher-forcing stabilizes training by ensuring the model always sees correct context, while autoregression manifests fully during actual sequence generation.
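The training-time half of this comparison can be made concrete with a small sketch that builds the (context, target) pairs from a true sequence. The function name and the `<sos>` token string are illustrative choices, not part of GEMORNA-UTR.

```python
SOS = "<sos>"


def teacher_forced_pairs(true_sequence):
    """Build (context, target) pairs where every context uses true tokens.

    At inference time the contexts would instead contain the model's own
    earlier predictions, so an early mistake propagates forward.
    """
    tokens = [SOS] + list(true_sequence)
    return [(tokens[:t], tokens[t]) for t in range(1, len(tokens))]


pairs = teacher_forced_pairs("GCA")
for context, target in pairs:
    print(context, "->", target)
```

In practice all of these pairs are evaluated in one forward pass using a causal attention mask, rather than one at a time as the loop suggests.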

Key Differences: Pre-training vs Fine-tuning

| Aspect | Pre-training | Fine-tuning |
|---|---|---|
| Training Data | All natural UTRs | High-efficiency/stability UTRs |
| Purpose | Learn general sequence patterns | Bias toward functional properties |
| Autoregression | Yes (teacher-forced) | Yes (teacher-forced) |
| Loss Function | Cross-entropy | Cross-entropy |
| Focus | Natural sequence “language” | Functional performance |

Why This Approach Matters

The combination of pre-training and fine-tuning enables GEMORNA-UTR to generate novel UTR sequences that:

- Follow natural sequence patterns: The pre-training ensures generated sequences look realistic and follow biological constraints

- Optimize for functionality: Fine-tuning biases the model toward sequences with high translation efficiency and stability

- Enable therapeutic applications: This dual advantage makes the model particularly valuable for mRNA therapeutics and vaccine development

The autoregressive nature of the generation process allows for flexible, de novo UTR design while maintaining biological plausibility—a crucial balance for practical applications in biotechnology and medicine.

Part II: GEMORNA for Coding Sequences - Emergent Translational Efficiency

The Unexpected Discovery

Recent findings with GEMORNA reveal a fascinating emergent property: the model can generate coding sequences (CDSs) with enhanced translational efficiency despite never being explicitly trained to optimize for it. This discovery sheds light on how decoding strategies can unlock hidden capabilities in sequence generation models.

Key features of GEMORNA-generated CDSs, such as the codon adaptation index (CAI), increased progressively across decoding strategies: from unbiased sampling, to biased sampling, to greedy search and beam search. This progression suggests that although GEMORNA was trained on natural CDSs, it can generate sequences that deviate from natural ones in ways that may enhance translational efficiency.

Training vs. Inference: A Critical Distinction

The improvement in translational efficiency metrics occurs not during training, but during inference through the choice of decoding strategy. Here’s the key insight:

Training Phase: GEMORNA uses a standard cross-entropy loss function between predicted codon probabilities and ground-truth natural CDS tokens. The model learns to reproduce natural CDS sequences—there’s no explicit optimization for CAI or other efficiency-related metrics.

Inference Phase: The magic happens when different decoding strategies are applied. These methods introduce varying degrees of bias toward high-probability codons, which creates the observed improvements in translational efficiency.

How Decoding Strategies Create the Effect

Unbiased Sampling

In unbiased sampling, each codon is selected according to the raw softmax probability distribution from the model. High-probability codons are more likely to be chosen, but low-probability codons are still drawn at the rate the model assigns them, so rare codons appear regularly and CAI stays relatively low.

Biased Sampling

Methods like top-k and top-p (nucleus) sampling restrict sampling to the most probable codons, while lowering the temperature sharpens the distribution toward them. In both cases, low-probability codons, which often correspond to rare or suboptimal codons for translation, are cut off or strongly down-weighted. Frequent codons are therefore used more often, which raises CAI.
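The three biasing methods can be sketched as small functions over a single codon distribution. The probabilities in `leu_probs` are made-up values for one leucine position, not GEMORNA outputs, and the function names are illustrative.

```python
import math
import random


def apply_temperature(probs, temperature):
    """Rescale a distribution; temperature < 1 sharpens it toward likely codons."""
    scaled = {c: math.exp(math.log(p) / temperature) for c, p in probs.items() if p > 0}
    total = sum(scaled.values())
    return {c: v / total for c, v in scaled.items()}


def top_k_filter(probs, k):
    """Keep only the k most probable codons, then renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {c: p / total for c, p in kept}


def top_p_filter(probs, p_threshold):
    """Keep the smallest set of top codons whose mass reaches p_threshold."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for codon, p in ranked:
        kept.append((codon, p))
        cumulative += p
        if cumulative >= p_threshold:
            break
    total = sum(p for _, p in kept)
    return {c: p / total for c, p in kept}


# Hypothetical model output for one leucine position (six synonymous codons).
leu_probs = {"CUG": 0.40, "CUC": 0.20, "CUU": 0.13, "UUG": 0.13, "CUA": 0.07, "UUA": 0.07}

sharpened = apply_temperature(leu_probs, temperature=0.5)
top2 = top_k_filter(leu_probs, k=2)
nucleus = top_p_filter(leu_probs, p_threshold=0.7)

# Sampling then proceeds from the filtered distribution.
codon = random.Random(0).choices(list(top2), weights=list(top2.values()), k=1)[0]
print(sharpened, top2, nucleus, codon, sep="\n")
```

Top-k and top-p remove the rarest codons outright, whereas temperature only reweights them, which is why the three methods bias codon usage to different degrees.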

Greedy Search

This deterministic approach always selects the single most probable codon at each step. It strongly favors the most common codons from the training set, producing sequences with significantly increased CAI.

Beam Search

By maintaining multiple high-probability candidate sequences and selecting the best overall based on cumulative probability, beam search tends to maximize global likelihood. This often results in the highest CAI among all strategies because the generated CDS consistently uses the most frequent codons.
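To show how greedy and beam search can disagree, the sketch below decodes a three-residue protein with a toy conditional model. `toy_codon_probs` and its probabilities are hypothetical: the rule that a G-ending codon favors another G-ending codon is invented purely so that the locally best first choice is not the globally best one.

```python
import math

# Real synonymous codons for lysine (K) and glutamate (E).
SYNONYMS = {"K": ["AAA", "AAG"], "E": ["GAA", "GAG"]}


def toy_codon_probs(prev_codon, amino_acid):
    """Hypothetical stand-in for the model: P(codon | previous codon, amino acid)."""
    first, second = SYNONYMS[amino_acid]
    if prev_codon is not None and prev_codon.endswith("G"):
        return {first: 0.1, second: 0.9}   # made-up context effect
    return {first: 0.55, second: 0.45}


def greedy_decode(protein):
    """Pick the single most probable codon at every step."""
    codons, prev = [], None
    for aa in protein:
        probs = toy_codon_probs(prev, aa)
        prev = max(probs, key=probs.get)
        codons.append(prev)
    return codons


def beam_search(protein, beam_width=3):
    """Keep the beam_width best partial sequences by cumulative log-probability."""
    beams = [([], 0.0)]
    for aa in protein:
        candidates = []
        for codons, logp in beams:
            prev = codons[-1] if codons else None
            for codon, p in toy_codon_probs(prev, aa).items():
                candidates.append((codons + [codon], logp + math.log(p)))
        beams = sorted(candidates, key=lambda b: b[1], reverse=True)[:beam_width]
    return beams[0][0]


greedy_cds = greedy_decode("KEK")
beam_cds = beam_search("KEK", beam_width=3)
print("greedy:", greedy_cds)
print("beam:  ", beam_cds)
```

Greedy search commits to the locally best first codon, while beam search keeps the alternative alive long enough to find a sequence with higher overall likelihood under this toy model.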

The Underlying Mechanism

The key to understanding this phenomenon lies in the relationship between codon frequency and translational efficiency:

Training Data Patterns: During training, the model learns probability distributions from natural CDS sequences where frequent codons are typically those that organisms prefer for optimal translation.

CAI Correlation: The Codon Adaptation Index measures how well a sequence uses these preferred codons. Frequent codons in training data often correspond to higher CAI values.

Emergent Optimization: When decoding methods favor high-probability codons, they indirectly select for preferred codons, resulting in improved translational efficiency metrics.
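The link between codon frequency and CAI can be illustrated with the standard definition: each codon gets a relative adaptiveness weight (its frequency divided by that of the most frequent synonymous codon), and CAI is the geometric mean of the weights along the sequence. The codon counts below are made up, and the two-amino-acid table is a toy subset of the genetic code.

```python
import math

SYNONYMS = {"K": ["AAA", "AAG"], "E": ["GAA", "GAG"]}

# Hypothetical codon counts from a reference set of highly expressed genes.
CODON_COUNTS = {"AAA": 40, "AAG": 60, "GAA": 30, "GAG": 70}


def relative_adaptiveness(codon_counts, synonyms):
    """w(codon) = count / max count among its synonymous codons."""
    weights = {}
    for codons in synonyms.values():
        best = max(codon_counts[c] for c in codons)
        for c in codons:
            weights[c] = codon_counts[c] / best
    return weights


def cai(cds, weights):
    """Geometric mean of the codon weights along a coding sequence."""
    if len(cds) % 3 != 0:
        raise ValueError("CDS length must be a multiple of 3")
    codons = [cds[i:i + 3] for i in range(0, len(cds), 3)]
    return math.exp(sum(math.log(weights[c]) for c in codons) / len(codons))


weights = relative_adaptiveness(CODON_COUNTS, SYNONYMS)
cai_frequent = cai("AAGGAGAAG", weights)  # always the most frequent codon
cai_rare = cai("AAAGAAAAA", weights)      # always the less frequent codon
print(f"frequent codons: {cai_frequent:.3f}, rare codons: {cai_rare:.3f}")
```

A decoder that always picks the most frequent synonymous codon reaches the maximum CAI of 1, which is why strategies that favor high-probability codons push the metric upward.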

Implications for Synthetic Biology

These emergent properties have significant implications for synthetic biology applications. The GEMORNA framework demonstrates that:

- Implicit Optimization: Models can achieve desired biological properties without explicit objective functions

- Decoding Strategy Selection: The choice of inference method becomes a crucial design decision for synthetic sequence generation

- Natural vs. Enhanced: AI models can generate sequences that deviate from natural examples in ways that may improve translational efficiency while remaining biologically valid

- Comprehensive mRNA Design: By combining UTR design with CDS generation, researchers can optimize entire mRNA molecules for therapeutic applications

Conclusion

GEMORNA represents a sophisticated approach to computational mRNA design, using a decoder-only Transformer for autoregressive UTR generation and an encoder-decoder Transformer for protein-guided CDS synthesis. By combining broad biological knowledge from pre-training with functional optimization through fine-tuning and strategic decoding, it offers a powerful toolkit for designing mRNA sequences that are both biologically realistic and functionally optimized.

GEMORNA’s ability to generate sequences with enhanced translational efficiency through decoding strategies alone represents a compelling example of emergent AI capabilities. This finding suggests that the path to better synthetic biology tools may not always require complex multi-objective optimization—sometimes, the right inference strategy can unlock desired properties that are already latent in the learned representations.

The progressive improvement from unbiased sampling to beam search suggests that the model has captured codon usage patterns that correlate with translational efficiency, even though this was never an explicit training objective. This opens new avenues for exploring what other biological properties might emerge from different decoding approaches.

The model’s success demonstrates how modern deep learning architectures can be adapted to address specific challenges in computational biology, opening new avenues for rational design of therapeutic mRNAs that optimize both regulatory regions and coding sequences simultaneously.